Have you seen the heated Facebook discussions where our colleagues estimate the percentage of a Sourcer’s research work that will soon be automated – anywhere from 5% to 80%? Some say that we are in a dying profession. Time will tell, but I am currently with the “5%” crowd. I do agree that some other jobs will change or go away as machines “replace” people, and that new types of jobs will be created too. But Sourcer jobs and functions are not going away.

What is Machine Learning? Simply speaking, we have two types of “objects” – for example, job descriptions and resumes. We feed this type of info into the computer:

– Resume1 matches JobDescription1

– Resume2 matches JobDescription1

– Resume1 does not match JobDescription2

– Resume2 matches JobDescription2

– Resume3 does not match JobDescription2

(etc.)

Here Resume1, JobDescription1, etc. are just blobs of data (representing the content of resumes and job descriptions). Inside the computer, the data looks like this: 00110011100011000010… It’s hard to imagine that a human would learn anything from staring at strings of 1s and 0s. But research and real-life applications show that, in certain situations, once it has been “fed” enough labeled examples like these, the computer learns the pattern and can start matching on its own.
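To make the idea of “feeding” labeled pairs to a computer a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the resume and job texts are invented, the word-overlap score is a toy feature, and the “learning” is just picking the cutoff that best separates the labeled examples. Real matching systems are far more involved, and this is not how any particular product works.

```python
def tokens(text):
    """Split text into a set of lowercase words."""
    return set(text.lower().split())


def overlap(resume, job):
    """Share of words the two texts have in common (Jaccard similarity)."""
    r, j = tokens(resume), tokens(job)
    union = r | j
    return len(r & j) / len(union) if union else 0.0


def fit_threshold(examples):
    """Pick the overlap cutoff that classifies the labeled pairs best.

    examples: list of (resume_text, job_text, is_match) tuples.
    """
    scores = sorted({overlap(r, j) for r, j, _ in examples})
    # Candidate cutoffs are the midpoints between neighboring scores.
    candidates = [(a + b) / 2 for a, b in zip(scores, scores[1:])] or [0.5]

    def accuracy(cutoff):
        hits = sum((overlap(r, j) >= cutoff) == label for r, j, label in examples)
        return hits / len(examples)

    return max(candidates, key=accuracy)


def predict(resume, job, threshold):
    """Predict a match for a pair the program has not seen before."""
    return overlap(resume, job) >= threshold


# Made-up texts standing in for the "blobs of data".
job1 = "python developer sql"
job2 = "java developer sql"
resume1 = "python developer sql aws"
resume2 = "python java developer sql"
resume3 = "sales manager retail"

# The same five labeled pairs as in the list above.
training = [
    (resume1, job1, True),
    (resume2, job1, True),
    (resume1, job2, False),
    (resume2, job2, True),
    (resume3, job2, False),
]

threshold = fit_threshold(training)

# A new resume the program was never shown.
resume4 = "java developer sql docker"
matches_job2 = predict(resume4, job2, threshold)
matches_job1 = predict(resume4, job1, threshold)
```

With only five examples and one crude feature, the program simply learns “enough shared words means a match”, which is exactly the kind of keyword-driven guess that tends to go wrong on real resumes.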

From testing a number of recruiting matching systems, I can say that we are currently far from automatically matching resumes to jobs correctly. As part of a research project for a client, my partners and I reviewed a sample of 100 resumes matched to several job descriptions by three leading software systems. Our study revealed that all three performed equally badly. In most “matching” cases we could guess a reason for the match (such as a keyword), but only about 3% of the matches sounded right. (Of course, we are picky, but still…)

There are good reasons, though, why ML-based systems do not match resumes to jobs well (yet?).

One very simple reason that I haven’t seen discussed much is the difficulty of parsing the data in recruiting matching systems. People are bad at writing both job descriptions and resumes. (Know what I am saying?) The machine needs to do some heavy deciphering; it can use some other data, such as a dictionary of term synonyms, but the task is hard. It could be that machines need more data to learn from than most current matching systems have. (LinkedIn would be in a position to do matching, given the amount of data it has, but it is behind others.)
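As a small illustration of why a synonym dictionary helps (and why it is only a start), here is a hedged Python sketch. The `SYNONYMS` table and the sample texts are invented for this example; a real system would need a much larger dictionary and would still trip over abbreviations, typos and context.

```python
# Hypothetical synonym table: each variant maps to one canonical term.
SYNONYMS = {
    "js": "javascript",
    "dev": "developer",
    "postgres": "postgresql",
}


def words(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace(",", " ").split())


def normalize(text):
    """Replace known variants with their canonical term."""
    return {SYNONYMS.get(word, word) for word in words(text)}


resume = "Senior JS dev, Postgres"
job = "JavaScript developer PostgreSQL"

# Without the dictionary, the two texts share no words at all.
raw_shared = words(resume) & words(job)

# With it, all three job terms are found in the resume.
normalized_shared = normalize(resume) & normalize(job)
```

The person who wrote this resume is an obvious fit to a human reader, yet a plain keyword comparison sees no overlap until the variants are mapped to the same terms.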

When recruiting matching that actually works arrives – in certain areas and industries first – we will face a new challenge. Many are worried about machines potentially learning to discriminate, and about matching algorithms needing to be audited or combined with some “anti-bias” algorithms. But even more broadly, when ML-based hiring works fine on its own, sometime in the future, how will we get some human understanding of the reasons for its decisions? As an article from MIT Technology Review says, we need “ways of making techniques like deep learning more understandable to their creators and accountable to their users. Otherwise, it will be hard to predict when failures might occur”.

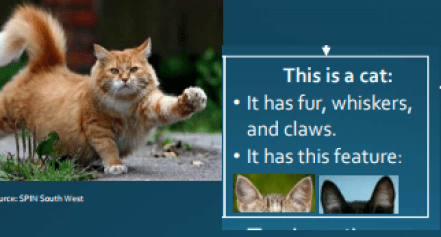

There are interesting efforts to make machines “explain themselves” at DARPA. I copied the image above from a DARPA research paper, which I recommend reading if the subject interests you.

Making machines tell us “what” they learn is a fascinating research topic. It is also of practical importance for the future of those areas in our industry where we do apply automation. And, learning and controlling what automated systems do will continue to require our human presence.

Comments 4

Irina, have you done much testing with Google Jobs? By far, that is the technology that most excites me, that I’m aware of, although really it is my perception more than anything tangible.

Author

Randy, I think they have a way to go. But I do think that Google’s huge amount of stored information (plus their clever engineers) puts them in a great position to achieve some kind of “matching” that works. We’ll see.

“And, learning and controlling what automated systems do will continue to require our human presence.”

And let me add “patience” to the end of that sentence. Sourcing requires human patience; patience to sort through all the data and all the noise, now more than ever.

Hi Irina, for sure, for NOW, we will not be replaced. But in the future, I believe we will. Pls do not forget, this is a JOURNEY. There are many issues and many challenges that we are yet to tackle, but do not be fooled that it will remain this way! Technology is growing exponentially – It takes less and less time to learn and fix. It is my understanding from what I read in several places that researchers are trying to understand that “black box” and where some errors occur. And I am sure they will. Let’s look at the big picture and prepare for what’s coming!